OSM’s Prometheus and Grafana stack

Overview

The following doc walks through the process of creating a simple Prometheus and Grafana stack to enable observability and monitoring of OSM’s service mesh.

This setup is not meant for production. For production-grade deployments, refer to Prometheus Operator.

Deploying a Prometheus Instance

The easiest way to deploy a Prometheus instance is through Helm:

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

helm install stable prometheus-community/prometheus

If this step succeeds, Helm prints some information about the release, including the following:

The Prometheus server can be accessed via port 80 on the following DNS name from within your cluster:

stable-prometheus-server.metrics.svc.cluster.local

Note the DNS name; we will need it later to add Prometheus as a data source for Grafana.

Next, we need to configure the targets to scrape. OSM relies on additional relabeling and endpoint configuration, so we strongly recommend replacing the default Prometheus configuration; otherwise the OSM Grafana dashboards might show incomplete data.

kubectl get configmap

NAME                             DATA   AGE
stable-prometheus-alertmanager   1      22s
stable-prometheus-server         5      22s

Let’s replace the configuration of stable-prometheus-server:

kubectl edit configmap stable-prometheus-server

Now, in your editor of choice, find the prometheus.yml key and replace its value with OSM’s scraping configuration (take care to preserve the YAML indentation):

(....)

prometheus.yml: |
  global:
    scrape_interval: 10s
    scrape_timeout: 10s
    evaluation_interval: 1m
  scrape_configs:
    - job_name: 'kubernetes-apiservers'
      kubernetes_sd_configs:
        - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        # TODO need to remove this when the CA and SAN match
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      metric_relabel_configs:
        - source_labels: [__name__]
          regex: '(apiserver_watch_events_total|apiserver_admission_webhook_rejection_count)'
          action: keep
      relabel_configs:
        - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
          action: keep
          regex: default;kubernetes;https
    - job_name: 'kubernetes-nodes'
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
        - role: node
      relabel_configs:
        - action: labelmap
          regex: __meta_kubernetes_node_label_(.+)
        - target_label: __address__
          replacement: kubernetes.default.svc:443
        - source_labels: [__meta_kubernetes_node_name]
          regex: (.+)
          target_label: __metrics_path__
          replacement: /api/v1/nodes/${1}/proxy/metrics
    - job_name: 'kubernetes-pods'
      kubernetes_sd_configs:
        - role: pod
      metric_relabel_configs:
        - source_labels: [__name__]
          regex: '(envoy_server_live|envoy_cluster_upstream_rq_xx|envoy_cluster_upstream_cx_active|envoy_cluster_upstream_cx_tx_bytes_total|envoy_cluster_upstream_cx_rx_bytes_total|envoy_cluster_upstream_cx_destroy_remote_with_active_rq|envoy_cluster_upstream_cx_connect_timeout|envoy_cluster_upstream_cx_destroy_local_with_active_rq|envoy_cluster_upstream_rq_pending_failure_eject|envoy_cluster_upstream_rq_pending_overflow|envoy_cluster_upstream_rq_timeout|envoy_cluster_upstream_rq_rx_reset|^osm.*)'
          action: keep
      relabel_configs:
        - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
          action: keep
          regex: true
        - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
          action: replace
          target_label: __metrics_path__
          regex: (.+)
        - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
          action: replace
          regex: ([^:]+)(?::\d+)?;(\d+)
          replacement: $1:$2
          target_label: __address__
        - source_labels: [__meta_kubernetes_namespace]
          action: replace
          target_label: source_namespace
        - source_labels: [__meta_kubernetes_pod_name]
          action: replace
          target_label: source_pod_name
        - regex: '(__meta_kubernetes_pod_label_app)'
          action: labelmap
          replacement: source_service
        - regex: '(__meta_kubernetes_pod_label_osm_envoy_uid|__meta_kubernetes_pod_label_pod_template_hash|__meta_kubernetes_pod_label_version)'
          action: drop
        # for non-ReplicaSets (DaemonSet, StatefulSet)
        # __meta_kubernetes_pod_controller_kind=DaemonSet
        # __meta_kubernetes_pod_controller_name=foo
        # =>
        # workload_kind=DaemonSet
        # workload_name=foo
        - source_labels: [__meta_kubernetes_pod_controller_kind]
          action: replace
          target_label: source_workload_kind
        - source_labels: [__meta_kubernetes_pod_controller_name]
          action: replace
          target_label: source_workload_name
        # for ReplicaSets
        # __meta_kubernetes_pod_controller_kind=ReplicaSet
        # __meta_kubernetes_pod_controller_name=foo-bar-123
        # =>
        # workload_kind=Deployment
        # workload_name=foo-bar
        # deployment=foo
        - source_labels: [__meta_kubernetes_pod_controller_kind]
          action: replace
          regex: ^ReplicaSet$
          target_label: source_workload_kind
          replacement: Deployment
        - source_labels:
            - __meta_kubernetes_pod_controller_kind
            - __meta_kubernetes_pod_controller_name
          action: replace
          regex: ^ReplicaSet;(.*)-[^-]+$
          target_label: source_workload_name
    - job_name: 'smi-metrics'
      kubernetes_sd_configs:
        - role: pod
      relabel_configs:
        - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
          action: keep
          regex: true
        - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
          action: replace
          target_label: __metrics_path__
          regex: (.+)
        - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
          action: replace
          regex: ([^:]+)(?::\d+)?;(\d+)
          replacement: $1:$2
          target_label: __address__
      metric_relabel_configs:
        - source_labels: [__name__]
          regex: 'envoy_.*osm_request_(total|duration_ms_(bucket|count|sum))'
          action: keep
        - source_labels: [__name__]
          action: replace
          regex: envoy_response_code_(\d{3})_source_namespace_.*_source_kind_.*_source_name_.*_source_pod_.*_destination_namespace_.*_destination_kind_.*_destination_name_.*_destination_pod_.*_osm_request_total
          target_label: response_code
        - source_labels: [__name__]
          action: replace
          regex: envoy_response_code_\d{3}_source_namespace_(.*)_source_kind_.*_source_name_.*_source_pod_.*_destination_namespace_.*_destination_kind_.*_destination_name_.*_destination_pod_.*_osm_request_total
          target_label: source_namespace
        - source_labels: [__name__]
          action: replace
          regex: envoy_response_code_\d{3}_source_namespace_.*_source_kind_(.*)_source_name_.*_source_pod_.*_destination_namespace_.*_destination_kind_.*_destination_name_.*_destination_pod_.*_osm_request_total
          target_label: source_kind
        - source_labels: [__name__]
          action: replace
          regex: envoy_response_code_\d{3}_source_namespace_.*_source_kind_.*_source_name_(.*)_source_pod_.*_destination_namespace_.*_destination_kind_.*_destination_name_.*_destination_pod_.*_osm_request_total
          target_label: source_name
        - source_labels: [__name__]
          action: replace
          regex: envoy_response_code_\d{3}_source_namespace_.*_source_kind_.*_source_name_.*_source_pod_(.*)_destination_namespace_.*_destination_kind_.*_destination_name_.*_destination_pod_.*_osm_request_total
          target_label: source_pod
        - source_labels: [__name__]
          action: replace
          regex: envoy_response_code_\d{3}_source_namespace_.*_source_kind_.*_source_name_.*_source_pod_.*_destination_namespace_(.*)_destination_kind_.*_destination_name_.*_destination_pod_.*_osm_request_total
          target_label: destination_namespace
        - source_labels: [__name__]
          action: replace
          regex: envoy_response_code_\d{3}_source_namespace_.*_source_kind_.*_source_name_.*_source_pod_.*_destination_namespace_.*_destination_kind_(.*)_destination_name_.*_destination_pod_.*_osm_request_total
          target_label: destination_kind
        - source_labels: [__name__]
          action: replace
          regex: envoy_response_code_\d{3}_source_namespace_.*_source_kind_.*_source_name_.*_source_pod_.*_destination_namespace_.*_destination_kind_.*_destination_name_(.*)_destination_pod_.*_osm_request_total
          target_label: destination_name
        - source_labels: [__name__]
          action: replace
          regex: envoy_response_code_\d{3}_source_namespace_.*_source_kind_.*_source_name_.*_source_pod_.*_destination_namespace_.*_destination_kind_.*_destination_name_.*_destination_pod_(.*)_osm_request_total
          target_label: destination_pod
        - source_labels: [__name__]
          action: replace
          regex: .*(osm_request_total)
          target_label: __name__
        - source_labels: [__name__]
          action: replace
          regex: envoy_source_namespace_(.*)_source_kind_.*_source_name_.*_source_pod_.*_destination_namespace_.*_destination_kind_.*_destination_name_.*_destination_pod_.*_osm_request_duration_ms_(bucket|sum|count)
          target_label: source_namespace
        - source_labels: [__name__]
          action: replace
          regex: envoy_source_namespace_.*_source_kind_(.*)_source_name_.*_source_pod_.*_destination_namespace_.*_destination_kind_.*_destination_name_.*_destination_pod_.*_osm_request_duration_ms_(bucket|sum|count)
          target_label: source_kind
        - source_labels: [__name__]
          action: replace
          regex: envoy_source_namespace_.*_source_kind_.*_source_name_(.*)_source_pod_.*_destination_namespace_.*_destination_kind_.*_destination_name_.*_destination_pod_.*_osm_request_duration_ms_(bucket|sum|count)
          target_label: source_name
        - source_labels: [__name__]
          action: replace
          regex: envoy_source_namespace_.*_source_kind_.*_source_name_.*_source_pod_(.*)_destination_namespace_.*_destination_kind_.*_destination_name_.*_destination_pod_.*_osm_request_duration_ms_(bucket|sum|count)
          target_label: source_pod
        - source_labels: [__name__]
          action: replace
          regex: envoy_source_namespace_.*_source_kind_.*_source_name_.*_source_pod_.*_destination_namespace_(.*)_destination_kind_.*_destination_name_.*_destination_pod_.*_osm_request_duration_ms_(bucket|sum|count)
          target_label: destination_namespace
        - source_labels: [__name__]
          action: replace
          regex: envoy_source_namespace_.*_source_kind_.*_source_name_.*_source_pod_.*_destination_namespace_.*_destination_kind_(.*)_destination_name_.*_destination_pod_.*_osm_request_duration_ms_(bucket|sum|count)
          target_label: destination_kind
        - source_labels: [__name__]
          action: replace
          regex: envoy_source_namespace_.*_source_kind_.*_source_name_.*_source_pod_.*_destination_namespace_.*_destination_kind_.*_destination_name_(.*)_destination_pod_.*_osm_request_duration_ms_(bucket|sum|count)
          target_label: destination_name
        - source_labels: [__name__]
          action: replace
          regex: envoy_source_namespace_.*_source_kind_.*_source_name_.*_source_pod_.*_destination_namespace_.*_destination_kind_.*_destination_name_.*_destination_pod_(.*)_osm_request_duration_ms_(bucket|sum|count)
          target_label: destination_pod
        - source_labels: [__name__]
          action: replace
          regex: .*(osm_request_duration_ms_(bucket|sum|count))
          target_label: __name__
    - job_name: 'kubernetes-cadvisor'
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
        - role: node
      metric_relabel_configs:
        - source_labels: [__name__]
          regex: '(container_cpu_usage_seconds_total|container_memory_rss)'
          action: keep
      relabel_configs:
        - action: labelmap
          regex: __meta_kubernetes_node_label_(.+)
        - target_label: __address__
          replacement: kubernetes.default.svc:443
        - source_labels: [__meta_kubernetes_node_name]
          regex: (.+)
          target_label: __metrics_path__
          replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor

(.....)
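
The replace rules in the configuration above can be hard to read at a glance. The following is a minimal Python sketch of how such a rule behaves, assuming the documented Prometheus semantics: the source label values are joined with ";", the regex must match the whole joined string, and $1-style references in the replacement expand to capture groups. The helper apply_replace and the sample addresses and pod names are hypothetical, and Python's re module only approximates the RE2 engine that Prometheus uses.

```python
import re


def apply_replace(values, regex, replacement="$1"):
    """Approximate a Prometheus `replace` relabel rule.

    values: the source label values, in order.
    Returns the new target label value, or None when the regex does not
    match (Prometheus then leaves the target label unchanged).
    """
    joined = ";".join(values)  # source labels are joined with ';'
    match = re.fullmatch(regex, joined)  # the regex is anchored at both ends
    if match is None:
        return None
    # Translate $1 / ${1} references into Python's \g<1> syntax.
    template = re.sub(r"\$\{?(\d+)\}?", r"\\g<\1>", replacement)
    return match.expand(template)


# Scrape address: keep the pod IP, swap in the port from the annotation.
print(apply_replace(["10.0.0.5:8080", "15010"],
                    r"([^:]+)(?::\d+)?;(\d+)", "$1:$2"))

# ReplicaSet-owned pod: strip the trailing hash to get the workload name.
print(apply_replace(["ReplicaSet", "bookstore-v1-7d4c9f8b6"],
                    r"^ReplicaSet;(.*)-[^-]+$"))

# Other controllers do not match, so the earlier rule's value is kept.
print(apply_replace(["DaemonSet", "fluentd"], r"^ReplicaSet;(.*)-[^-]+$"))
```

The last call returns None, which is why the two generic rules that copy the controller kind and name come first in the configuration: the ReplicaSet rules only override them when they match.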

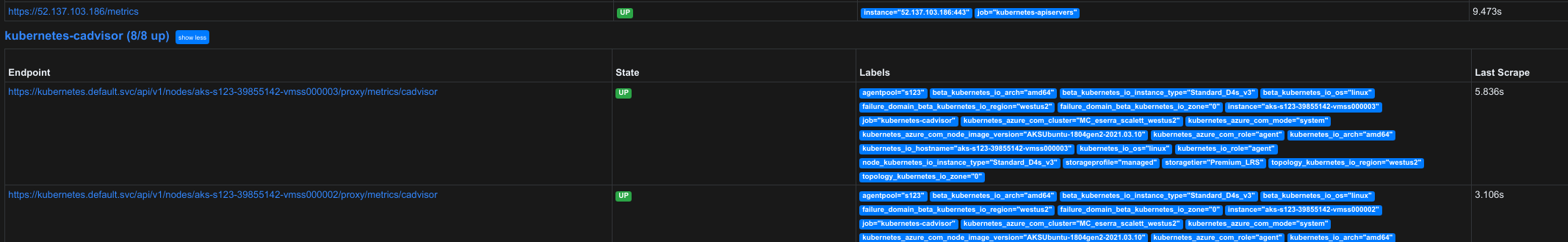
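
The smi-metrics job in the configuration above recovers labels that are encoded in the metric name itself. As a rough illustration, the Python sketch below rebuilds the osm_request_total regexes from that job and applies them to a made-up metric name. The raw name, the namespaces, and the pod names are assumptions for illustration only, the helper functions are hypothetical, and the osm_request_duration_ms rules (which follow the same pattern) are left out.

```python
import re

# Label fields in the order they appear in the raw stat name.
FIELDS = [
    "source_namespace", "source_kind", "source_name", "source_pod",
    "destination_namespace", "destination_kind", "destination_name",
    "destination_pod",
]

# First rule of the job: keep only OSM request metrics.
KEEP = r"envoy_.*osm_request_(total|duration_ms_(bucket|count|sum))"


def request_total_regex(captured):
    """Rebuild the config's osm_request_total regex that captures one field."""
    code = r"(\d{3})" if captured == "response_code" else r"\d{3}"
    parts = [f"_{field}_" + ("(.*)" if field == captured else ".*")
             for field in FIELDS]
    return "envoy_response_code_" + code + "".join(parts) + "_osm_request_total"


def relabel_request_total(name):
    """Return (metric_name, labels), or None if the keep rule drops the series."""
    if re.fullmatch(KEEP, name) is None:
        return None
    labels = {}
    for target in ["response_code"] + FIELDS:
        match = re.fullmatch(request_total_regex(target), name)
        if match:
            labels[target] = match.group(1)
    # Final rule: shorten the metric name itself.
    renamed = re.fullmatch(r".*(osm_request_total)", name)
    return (renamed.group(1) if renamed else name), labels


# Hypothetical raw name, for illustration only.
raw = (
    "envoy_response_code_200"
    "_source_namespace_bookbuyer_source_kind_Deployment"
    "_source_name_bookbuyer_source_pod_bookbuyer_7d4c"
    "_destination_namespace_bookstore_destination_kind_Deployment"
    "_destination_name_bookstore_v1_destination_pod_bookstore_v1_9f8b"
    "_osm_request_total"
)
name, labels = relabel_request_total(raw)
print(name)
print(labels)
```

Each rule extracts exactly one label, which is why the configuration repeats nearly the same regex once per label before the final rule renames the metric.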

After this step, Prometheus should be able to scrape the mesh and API endpoints. To verify that it can, forward its management port:

export POD_NAME=$(kubectl get pods --namespace <promNamespace> -l "app=prometheus,component=server" -o jsonpath="{.items[0].metadata.name}")

kubectl --namespace <promNamespace> port-forward $POD_NAME 9090

Then open http://localhost:9090/targets in a local browser.

There you should see most of the endpoints connected and up, ready to be scraped.
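
The same check can be scripted against the Prometheus HTTP API, which exposes target status at /api/v1/targets. The sketch below is one possible way to summarize that response per job. It assumes the port-forward above is still running on localhost:9090; the function names and the sample payload are made up for illustration.

```python
import json
from collections import Counter
from urllib.request import urlopen


def summarize_targets(payload):
    """Count active targets per (job, health) in an /api/v1/targets response."""
    counts = Counter()
    for target in payload["data"]["activeTargets"]:
        job = target["labels"].get("job", "unknown")
        counts[(job, target["health"])] += 1
    return dict(counts)


def fetch_targets(base_url="http://localhost:9090"):
    """Fetch the targets payload; needs the port-forward to be active."""
    with urlopen(f"{base_url}/api/v1/targets") as response:
        return json.load(response)


# Offline example with a trimmed, made-up payload.
sample = {
    "status": "success",
    "data": {
        "activeTargets": [
            {"labels": {"job": "kubernetes-pods"}, "health": "up"},
            {"labels": {"job": "kubernetes-pods"}, "health": "down"},
            {"labels": {"job": "smi-metrics"}, "health": "up"},
        ]
    },
}
print(summarize_targets(sample))
```

With the port-forward running, summarize_targets(fetch_targets()) gives the live counts for the cluster.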

Deploying a Grafana Instance

Similar to Prometheus, we will deploy a Grafana instance through Helm:

helm repo add grafana https://grafana.github.io/helm-charts

helm install grafana/grafana --generate-name

Next, retrieve Grafana’s admin password:

kubectl get secret --namespace <grafana namespace> <grafana secret name> -o jsonpath="{.data.admin-password}" | base64 --decode ; echo
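
The base64 --decode step is needed because Kubernetes stores Secret values base64-encoded under .data. As a small illustration, the Python sketch below performs the same decoding; the encoded string is a made-up example, not a real password, and decode_secret_value is a hypothetical helper.

```python
import base64


def decode_secret_value(encoded):
    """Decode one base64-encoded value from a Secret's .data map."""
    return base64.b64decode(encoded).decode("utf-8")


# "czNjcjN0" is the base64 encoding of the made-up password "s3cr3t".
print(decode_secret_value("czNjcjN0"))
```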

Next, forward Grafana’s web admin port:

export POD_NAME=$(kubectl get pods --namespace <grafana namespace> -l "app.kubernetes.io/name=grafana" -o jsonpath="{.items[0].metadata.name}")

kubectl --namespace <grafana namespace> port-forward $POD_NAME 3000

Open http://localhost:3000 and log in with the user admin and the password retrieved two steps above.

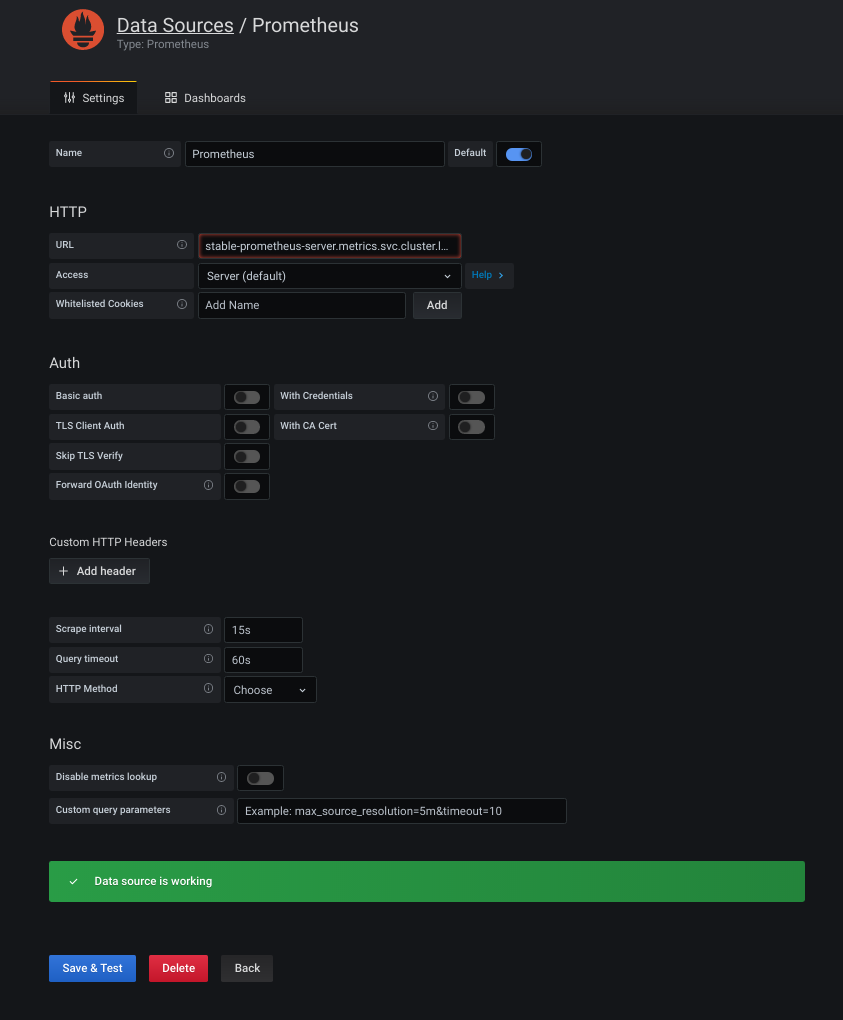

The next step is to add Prometheus as a data source for Grafana. To do so, navigate to the menu on the left, look for Data Sources, and choose to add a data source of type Prometheus.

On the new tab that opens, we just need to point to the Prometheus FQDN from our previous deployment. In our case, it was stable-prometheus-server.metrics.svc.cluster.local. In general, it has the form <service-name>.<namespace>.svc.cluster.local.

Saving and testing at this stage should be enough; Grafana will tell you whether the connection succeeded.
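
For repeatable setups, the same data source can also be created through Grafana's HTTP API (POST /api/datasources) instead of the web UI. The Python sketch below only builds the request body, using the in-cluster service FQDN form described above and port 80 from the Helm output. The helper names are hypothetical, "cluster.local" is assumed as the cluster domain, and sending the request is left out because it depends on how you authenticate to Grafana.

```python
import json


def service_fqdn(service, namespace, cluster_domain="cluster.local"):
    """Build the in-cluster DNS name of a Kubernetes Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def prometheus_datasource(service, namespace, port=80, name="Prometheus"):
    """Build a request body for Grafana's POST /api/datasources endpoint."""
    return {
        "name": name,
        "type": "prometheus",
        "access": "proxy",  # Grafana's backend issues the queries
        "url": f"http://{service_fqdn(service, namespace)}:{port}",
    }


payload = prometheus_datasource("stable-prometheus-server", "metrics")
print(json.dumps(payload, indent=2))
```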

Importing OSM Dashboards

OSM dashboards are available from two sources:

- our repository, as JSON blobs that can be imported through the web admin portal

- online at Grafana.com

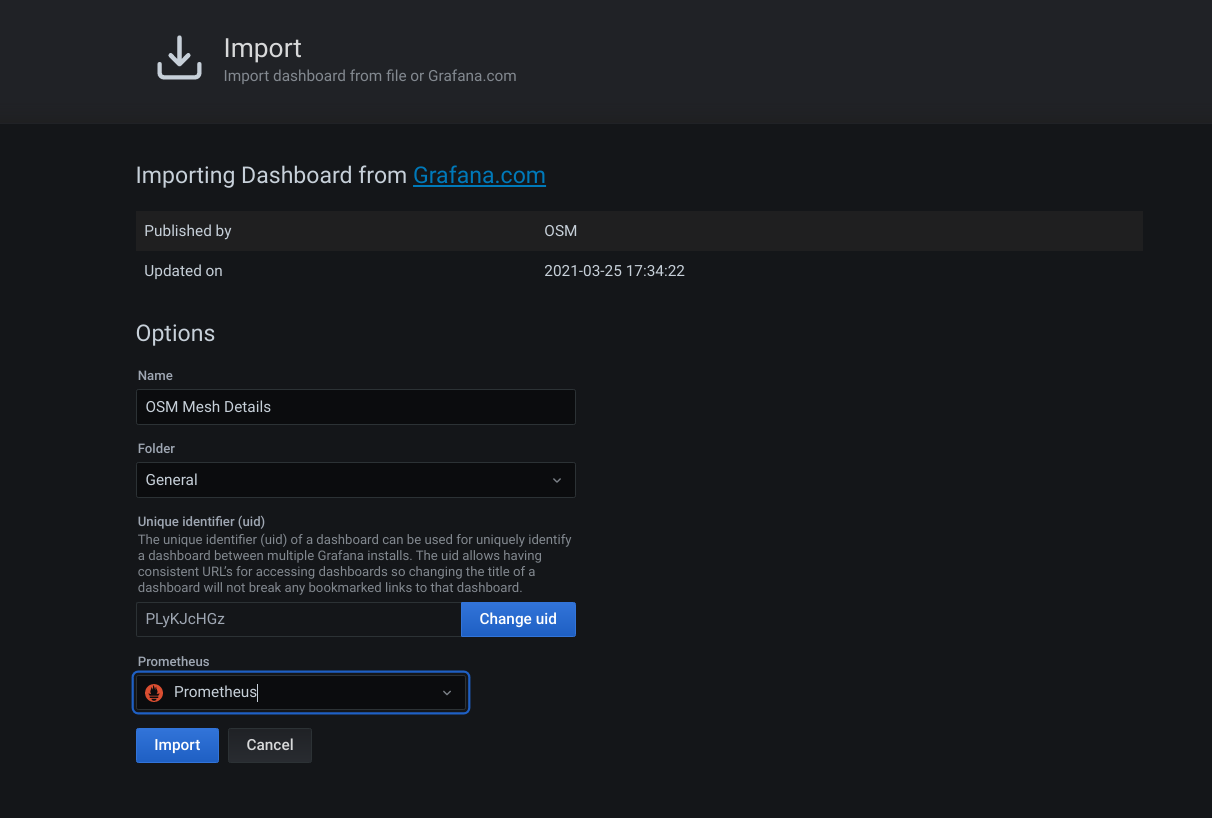

To import a dashboard, look for the + sign on the left menu and select Import.

You can import a dashboard directly by its ID on Grafana.com. For example, our OSM Mesh Details dashboard has ID 14145; enter the ID in the form and click Load:

The last step is to configure the Prometheus data source for the imported dashboard (bottom of the previous screenshot).

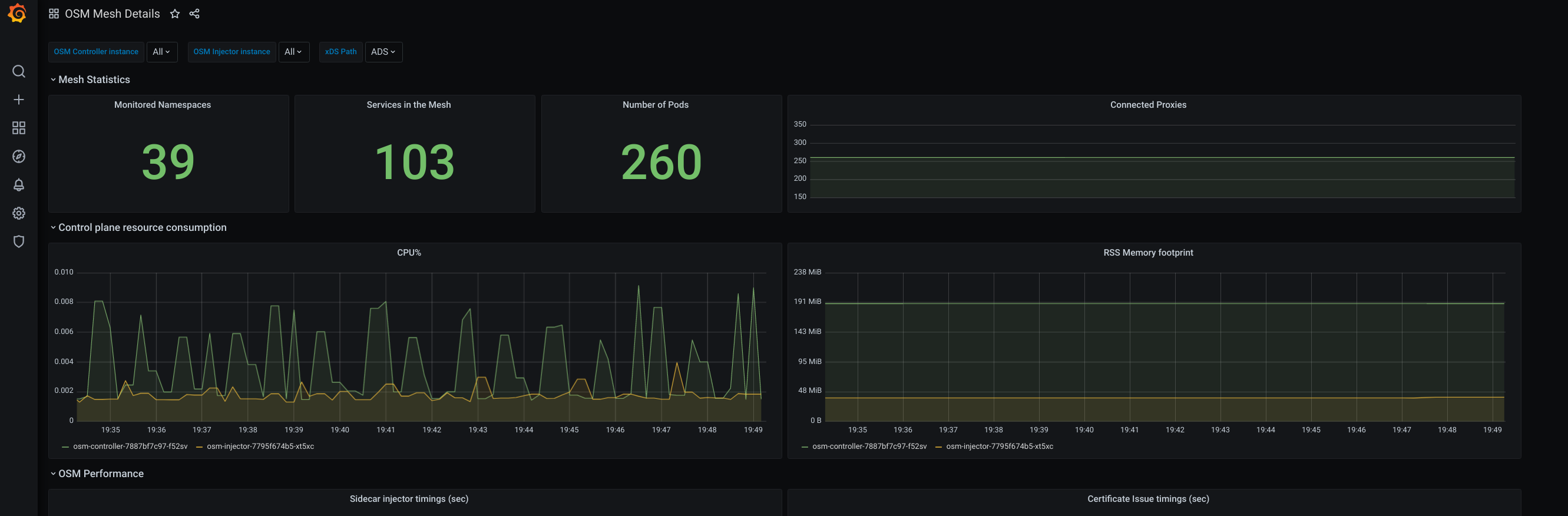

As soon as you click Import, Grafana takes you to the imported dashboard.
